Calculate ReLU (Leaky) Function

Online calculator and formulas for the ReLU (Rectified Linear Unit) activation function - the modern alternative to Sigmoid

ReLU Function Calculator

Rectified Linear Unit (ReLU)

The f(x) = max(0, x) or Leaky-ReLU: f(x) = max(αx, x) is one of the most important activation functions in Deep Learning.

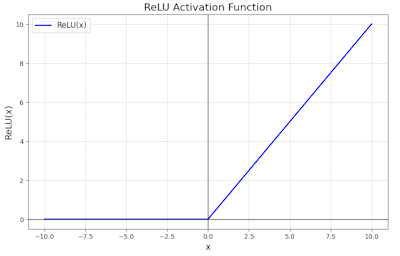

ReLU Graph

ReLU Graph: Zero for negative inputs, linear for positive values.

Leaky-ReLU: Allows small negative slope for better training.

|

|

What Makes ReLU Special?

The ReLU function revolutionized Deep Learning through its simplicity and effectiveness:

- Computationally efficient: Only a comparison, no exponential function

- Sparse activation: Many neurons can be "off" (f(x)=0)

- Strong gradients: No saturation at positive values

- Better convergence: Training is faster and more stable

- Biologically inspired: Similar to actual neuron activation

- Variations: Leaky-ReLU, ELU, GELU for special applications

ReLU Function Formulas

Standard ReLU

Simplest and fastest activation function

Leaky ReLU

Prevents issues with negative inputs

ReLU Derivative

Constant gradients (no Vanishing Gradient)

Leaky ReLU Derivative

Small slope at negative values

Parametric ReLU (PReLU)

Learnable: αᵢ is adjusted during training

ELU (Exponential Linear Unit)

Smooth function with better stability

Properties

Special Values

Domain

All real numbers

Range

Unbounded above, 0 below

Application

Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Modern Deep Learning architectures.

Detailed Description of the ReLU Function

Mathematical Definition

The ReLU (Rectified Linear Unit) function is one of the most widely used activation functions in modern Deep Learning. It is simple to compute, numerically stable, and leads to better convergence during training.

Using the Calculator

Enter any real number and optionally a Leaky parameter α. The calculator computes both function values and derivatives for backpropagation.

Historical Background

ReLU was popularized by Geoffrey Hinton in 2011 and led to a breakthrough in Deep Learning. Unlike Sigmoid and Tanh, ReLU enables deeper networks without Vanishing Gradient problems.

Properties and Variations

Deep Learning Applications

- Convolutional Neural Networks (CNNs) for image processing

- Recurrent Neural Networks (RNNs, LSTMs)

- Transformer and Attention mechanisms

- Generative Adversarial Networks (GANs)

ReLU Variations

- Leaky ReLU: Allows small negative values

- Parametric ReLU (PReLU): α is trainable

- ELU (Exponential Linear Unit): Smooth variant

- GELU: Gaussian Error Linear Unit (in Transformers)

Mathematical Properties

- Monotonicity: Monotonically increasing

- Non-linearity: Piecewise linear

- Sparsity: Many outputs are exactly 0

- Gradient: 0 or 1 (no Vanishing)

Interesting Facts

- ReLU enabled successful training of networks with 8+ hidden layers

- 50% of activations are typically 0 (sparsity)

- Neural networks learn faster with ReLU than with Sigmoid

- Dead ReLU Problem: Neurons can become "dead" and stop activating

Calculation Examples

Example 1: Standard ReLU

ReLU(0) = 0

ReLU(2) = 2

ReLU(-2) = 0

Example 2: Leaky ReLU (α=0.1)

f(0) = 0

f(2) = 2

f(-2) = -0.2

Example 3: Derivatives

f'(2) = 1 (steep rise)

f'(-2) = 0 (no gradient)

Leaky: f'(-2) = 0.1 (small slope)

Role in Neural Networks

Activation Function

In neural networks, the ReLU function transforms the sum of weighted inputs:

This enables non-linear decision boundaries during training and inference.

Backpropagation

The simple derivative enables efficient Gradient Descent:

No exponential function = faster and more stable!

Advantages and Disadvantages

Advantages

- Extremely fast to compute (just a comparison)

- No Vanishing Gradient problem in deep networks

- Leads to sparse activations

- Biologically realistic

- Easy to implement

Disadvantages

- Dead ReLU Problem (neurons can become "dead")

- Unbounded outputs for very large inputs

- Not differentiable at x=0

- Not centered around 0

- Requires careful weight initialization

|

|

|

|